X Space Recap - IoT, Telemetry, Sensor & Machine Data w/ Filecoin Foundation, IoTeX, WeatherXM, and DIMO

"NOAA allocates roughly $1.2 billion each year to gather weather data. WeatherXM can deliver comparable coverage for about 1% of that cost."

With this comparison, WeatherXM Co-Founder Manos Nikiforakis set the tone for our X Space, “IoT, Telemetry, Sensor & Machine Data”, hosted alongside Filecoin Foundation.

For July’s Space, we brought together:

Manos Nikiforakis (Co-Founder, WeatherXM)

Yevgeny Khessin (CTO and Co-Founder, DIMO)

Aaron Bassi (Head of Product, IoTeX)

Over 60 minutes, the group examined how decentralised physical infrastructure networks (DePIN) are reshaping data markets. They walked through proof chains that begin at the sensor, incentive models that discourage low-quality deployments, and programmes that reward EV owners for sharing telemetry.

DePIN is really on the rise. Networks are operating, real users are earning rewards, and real enterprises are consuming the data.

The following excerpts highlight the key moments from our recent X Space, diving deep into the insights shared.

Turning vehicle telemetry into real savings

Drivers who join DIMO first mint a "vehicle identity", a smart-contract wallet that stores their car's data and keeps them in charge of access.

From there they can release selected metrics to approved parties. A leading example is usage-based insurance: share only charging-session records and receive an annual rebate.

As DIMO's CTO Yevgeny Khessin explained, the programme with insurer Royal pays "$200 back per year" for that data.

Each transfer is cryptographically signed, so ownership stays with the driver while the insurer receives verifiable telemetry.
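The permission model described above can be sketched in a few lines. This is a minimal illustration, not DIMO's implementation: the VehicleIdentity class, its method names, and the sample metrics are all hypothetical, and the cryptographic signing of each transfer is left out to keep the focus on owner-controlled access.

```python
from dataclasses import dataclass, field


@dataclass
class VehicleIdentity:
    """Hypothetical stand-in for a driver-controlled vehicle identity."""
    owner: str
    telemetry: dict = field(default_factory=dict)
    grants: dict = field(default_factory=dict)  # grantee -> set of metric names

    def grant(self, caller: str, grantee: str, metrics: set) -> None:
        # Only the owner may authorise access to the vehicle's data.
        if caller != self.owner:
            raise PermissionError("only the owner can grant access")
        self.grants.setdefault(grantee, set()).update(metrics)

    def read(self, grantee: str) -> dict:
        # Return only the metrics this grantee was explicitly approved for.
        allowed = self.grants.get(grantee, set())
        return {k: v for k, v in self.telemetry.items() if k in allowed}


# Illustrative data: the driver releases charging sessions only.
car = VehicleIdentity(
    owner="driver",
    telemetry={"charging_sessions": 42, "location": "52.52,13.40", "speed_kmh": 87},
)
car.grant("driver", "insurer", {"charging_sessions"})
shared = car.read("insurer")
```

In this sketch the insurer sees the charging-session count and nothing else, while a party with no grant sees an empty record.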

This demonstrates how DePIN turns dormant signals into economic value—and why the underlying infrastructure matters.

The ledger as the "handshake" layer

The role of the ledger becomes clear when you consider interoperability requirements. It serves as the shared source of truth for composability between systems. New patterns emerge, such as vehicle-to-vehicle payments.

In a web2 world, that would have required coordination across Visa and multiple automakers. On a blockchain, the payment is straightforward because the parties meet on the same ledger.

The same "handshake" applies to data sharing. Yevgeny went on to highlight that using the ledger as the agreement layer, with private-key permissions, creates a system where only the owner can authorise access. This addresses recent controversies over car data being shared without clear consent.

Khessin summarised the benefits as ownership, interoperability, and verifiability. Looking ahead, machines will need to verify one another before acting on any message. A public ledger provides the audit trail for those decisions.

"Using a ledger as the source of truth for interoperability ... allows you to enable use cases that just weren't possible before."

This sets up the shift from isolated "data markets" to the protocol plumbing: identity, permissions, and payments negotiated on a neutral, verifiable layer.

Building the infrastructure: IoTeX's physical intelligence ecosystem

IoTeX positions itself as the connector between real-world data and AI systems through "realms". These are specialized subnets that allow DePIN projects to reward users with multiple tokens: their native token, realm-specific tokens, and IoTeX tokens.

Example: a mobility realm can reward a driver in both the project's token and an IoTeX realm token for verified trips, with proofs carried from device to contract.

The technical challenge is ensuring data integrity throughout the entire lifecycle: from collection at the device, through off-chain processing, to smart contract verification on-chain. Each step requires cryptographic proofs that the data hasn't been tampered with.
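One simple way to picture an end-to-end integrity trail is a hash chain, where each stage commits to the stage before it. The sketch below is an assumption-laden illustration rather than IoTeX's design: the stage names, record fields, and helper functions are hypothetical, and plain SHA-256 hashing stands in for whatever proof system a production network would use.

```python
import hashlib
import json


def digest(record: dict) -> str:
    # Canonical JSON so the same record always hashes to the same value.
    return hashlib.sha256(json.dumps(record, sort_keys=True).encode()).hexdigest()


def append_stage(chain: list, stage: str, payload: dict) -> None:
    # Each stage commits to the hash of the previous stage.
    prev = chain[-1]["hash"] if chain else ""
    body = {"stage": stage, "payload": payload, "prev": prev}
    chain.append({**body, "hash": digest(body)})


def verify(chain: list) -> bool:
    # Recompute every hash and check that the links line up.
    prev = ""
    for entry in chain:
        body = {"stage": entry["stage"], "payload": entry["payload"], "prev": entry["prev"]}
        if entry["prev"] != prev or entry["hash"] != digest(body):
            return False
        prev = entry["hash"]
    return True


chain = []
append_stage(chain, "device", {"trip_km": 12.4})
append_stage(chain, "offchain_processing", {"trip_km": 12.4, "verified": True})
append_stage(chain, "contract", {"reward_units": 3})
```

Changing any earlier payload after the fact makes verify(chain) return False, which is the property an enterprise consumer would rely on.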

IoTeX tracks this entire chain to ensure enterprise customers can trust the data they consume. This becomes critical as AI systems increasingly depend on real-world inputs for training and decision-making.

Incentives as quality control: finding shadows, fixing stations

WeatherXM demonstrates how economic incentives can drive data quality at scale. They tie rewards directly to measurable performance metrics.

They start at the device level. Stations sign each sensor payload with secure elements embedded during manufacturing. Location verification uses hardened GPS modules paired to the main controller. This makes spoofing significantly more difficult and lets the network trace readings back to specific units and coordinates.
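As a rough sketch of device-level signing, the example below tags each payload with a per-station key and checks the tag on receipt. It makes several assumptions: the station ID, key, and field names are invented for illustration, and an HMAC from the Python standard library stands in for the asymmetric signature a hardware secure element would normally produce.

```python
import hashlib
import hmac
import json

# Hypothetical registry of per-station keys provisioned at manufacturing.
STATION_KEYS = {"station-001": b"key-held-in-secure-element"}


def sign_payload(station_id: str, payload: dict) -> dict:
    # Serialise deterministically, then tag the bytes with the station's key.
    body = json.dumps(payload, sort_keys=True).encode()
    tag = hmac.new(STATION_KEYS[station_id], body, hashlib.sha256).hexdigest()
    return {"station_id": station_id, "payload": payload, "tag": tag}


def verify_payload(message: dict) -> bool:
    # Unknown stations and altered payloads both fail verification.
    key = STATION_KEYS.get(message["station_id"])
    if key is None:
        return False
    body = json.dumps(message["payload"], sort_keys=True).encode()
    expected = hmac.new(key, body, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["tag"])


msg = sign_payload("station-001", {"temp_c": 18.2, "lat": 37.98, "lon": 23.73})
tampered = {**msg, "payload": {**msg["payload"], "lat": 0.0}}
```

Because the coordinates are part of the signed payload, a reading whose location has been altered no longer verifies.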

Deployment matters too. Operators submit site photos for human review, with machine vision assistance planned for the future.

Then comes continuous quality assurance. WeatherXM analyses time-series data to detect poor placements. Repeating shadows signal nearby obstacles, not moving cloud cover. Similar pattern recognition applies to wind and other environmental signals.

The incentive mechanism is direct: poor deployments earn reduced rewards. The system writes on-chain attestations of station quality over time, making performance transparent to participants and customers.

“We analyse time-series data to identify repeating shadows. That signals a placement issue. Poorly deployed stations receive fewer rewards, and we record quality on-chain.”
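The shadow check can be illustrated with a toy example. This is a sketch under stated assumptions, not WeatherXM's algorithm: the function name, the thresholds, and the irradiance numbers are invented, and a dip is defined simply as a reading well below its two neighbouring time slots.

```python
def recurring_dips(days, drop=0.5, min_share=0.8):
    """Return time slots where irradiance dips on most days.

    days: list of equal-length lists, one reading per time slot per day.
    A slot dips when its reading is below `drop` times the mean of its
    two neighbours. Both thresholds are illustrative assumptions.
    """
    slots = len(days[0])
    flagged = []
    for s in range(1, slots - 1):
        dips = 0
        for day in days:
            neighbours = (day[s - 1] + day[s + 1]) / 2
            if neighbours > 0 and day[s] < drop * neighbours:
                dips += 1
        # A dip that recurs in the same slot suggests a fixed obstacle.
        if dips / len(days) >= min_share:
            flagged.append(s)
    return flagged


# Made-up readings: slot 3 is shaded every day, slot 5 only once (a cloud).
days = [
    [100, 300, 500, 150, 500, 300, 100],
    [110, 310, 520, 140, 510, 290, 90],
    [90, 280, 480, 120, 490, 310, 110],
    [100, 300, 500, 160, 500, 100, 100],
    [105, 305, 510, 130, 505, 295, 95],
]
shadow_slots = recurring_dips(days)
```

The one-off dip caused by a passing cloud is ignored, while the dip that repeats at the same time every day is flagged as a likely placement problem.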

This approach addresses a persistent problem with legacy sensor networks. Many government and corporate deployments struggle with maintenance costs and quality control. Making quality transparent filters good stations from bad without requiring a central gatekeeper.

WeatherXM extends this concept to forecast accuracy, tracking performance across different providers and planning to move those metrics on-chain as well.

AI agents and the future of automated data markets

Manos described WeatherXM's implementation of an MCP client that allows AI agents to request weather data without navigating traditional API documentation.

More significantly, they're exploring x402 protocols that enable agents to make autonomous payments. Instead of requiring human intervention with traditional payment methods, an AI agent with a crypto wallet can pay for historical or real-time weather data on-demand.
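The pay-per-request pattern can be simulated in memory. The sketch below only models the general idea of answering an unpaid request with HTTP status 402 (Payment Required) and retrying after payment; it does not reproduce the x402 wire format, and the classes, price, receipts, and weather values are all hypothetical.

```python
PRICE = 5  # hypothetical price per request, in arbitrary token units


class WeatherServer:
    def __init__(self):
        self.paid_receipts = set()

    def settle(self, receipt):
        # Stand-in for on-chain settlement of a payment.
        self.paid_receipts.add(receipt)

    def get(self, path, receipt=None):
        # No valid receipt: answer 402 Payment Required and quote the price.
        if receipt not in self.paid_receipts:
            return 402, {"price": PRICE}
        self.paid_receipts.discard(receipt)  # one receipt pays for one request
        return 200, {"path": path, "temperature_c": 21.5}


class Agent:
    def __init__(self, balance):
        self.balance = balance
        self.nonce = 0

    def fetch(self, server, path):
        status, body = server.get(path)
        # Pay and retry only if the quoted price fits the wallet balance.
        if status == 402 and body["price"] <= self.balance:
            self.balance -= body["price"]
            self.nonce += 1
            receipt = f"receipt-{self.nonce}"
            server.settle(receipt)
            status, body = server.get(path, receipt)
        return status, body


server = WeatherServer()
agent = Agent(balance=12)
status, body = agent.fetch(server, "/history/athens")
```

The agent completes the purchase without a human in the loop, and once its balance drops below the quoted price it simply receives the 402 response again.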

This capability unlocks new use cases around prediction markets, weather derivatives, and parametric insurance—all operating on-chain with minimal human intervention.

As synthetic content proliferates, the value of verifiable real-world data increases. AI systems need trusted inputs, and the cryptographic proofs that DePIN networks provide become essential infrastructure.

The compounding effect

These developments create a compounding effect. Shared protocols reduce coordination costs by eliminating integration friction. Transparent incentive alignment drives up data quality across networks. Participants capture direct value from resources they already own.

The models discussed show machine data evolving from siloed exhaust to a trusted, priced asset that enterprises consume for real business applications.

Decentralized Storage Alliance opens our Group Calls for technical discussions on AI, DePIN, storage, and decentralization. If you're building in this space, we'd welcome your participation in our ongoing research and community initiatives.

Keep in Touch

Follow DSA on X: @_DSAlliance

Follow DSA on LinkedIn: Decentralized Storage Alliance

Become a DSA member: dsalliance.io

What the Tragedy at the Library of Alexandria Tells Us About The Importance of Decentralized Storage

Alexandria, 48 BCE. In the chaos of Caesar's siege, flames meant to block enemy ships licked inland, reaching the Library's waterfront storehouses. Papyrus burst like dry leaves; ink rose as black smoke. Thousands of scrolls on topics ranging from geometry to medicine to poetry vanished before dawn. One blaze, one building, and centuries of knowledge were ash.

The lesson landed hard and fast: put the world’s memory in a single vault, and a single spark can erase it.

Why the Library was a Beacon for the World’s Minds

Alexandria's Library powered the Mediterranean’s information network. Scholars and traders from Persia, India, and Carthage streamed through its gates. Inside, scribes translated and recopied every scroll they touched. India spoke to Athens; Babylon debated Egypt, all under one roof.

Estimates place the collection anywhere between forty thousand and four hundred thousand scrolls. However, the raw tally matters less than the ambition: to gather everything humankind had written and make it conversant in one place. Alexandria became the Silicon Valley of the Hellenistic world. Euclid refined his Elements here; Eratosthenes measured Earth’s circumference within a handful of miles; physicians mapped nerves while dramatists perfected tragedy. Each scroll was a neural thread in a vast, centralized mind—alive only so long as that single body endured.

How Did Centralization Fail?

All knowledge sat in one building. Four separate forces struck it. Each force alone hurt the Library; together they emptied every shelf.

1. War and Fire

One building held the archive, and one battle lit the match. When Caesar's ships burned, sparks crossed the quay, and scrolls turned to soot in hours.

2. Power Shifts

Rulers changed; priorities flipped. Each new regime cut funds or seized rooms for soldiers. Knowledge depended on politics, not purpose.

3. Ideological Purges

Later bishops saw pagan danger in Greek science. Statues fell first, then shelves. Scrolls that clashed with doctrine vanished by decree.

4. Simple Neglect

No fire is needed when roofs leak. Papyrus molds, ink fades. Without steady upkeep, even genius crumbles to dust.

Digital Fires Happening Today

So what has been happening in modern times that reminds us of the Library of Alexandria? A few examples come to mind.

On 12 June 2025, a misconfigured network update at Google Cloud cascaded through the wider internet. Cloudflare's edge fell over, Azure regions stalled, and even AWS traffic spiked with errors as routing tables flapped. Music streams stopped mid-song, banking dashboards froze, newsroom CMSs blinked "503." One typo inside one provider turned millions of screens blank for hours. This was proof that a single technical spark can still torch a vast, centralised stack.

Just three days earlier, on 9 June 2025, Google emailed Maps users a different kind of erasure notice: users must export their cloud-stored Location History, or any data older than 90 days will be deleted. Years of commutes, holiday trails, and personal memories could simply vanish. The decision sits with one company; the burden of preservation falls on each user.

Then in July 2025, a Human Rights Watch report detailed how Russian authorities had “doubled down on censorship,” blocking thousands of sites and throttling VPNs to tighten state control of the national internet. What a citizen can read now hinges on a government flip-switch, not on the value of the data.

Today's Solution to Alexandria is On-Chain

To shield tomorrow's knowledge, there are three complementary layers.

The first layer is IPFS. This scatters every file across a network of peers, allowing any node to serve the content through its cryptographic hash, transforming the network into a digital bookshelf. On that foundation lies Arweave. Paying once ensures the data gets etched into a ledger designed to outlast budgets, ownership changes, and hardware refresh cycles.

Finally, Filecoin provides ongoing accountability. Storage providers earn tokens only if there is continual proof that the bytes remain intact. Together, redundancy, permanence, and incentives ensure that an outage, a policy change, or a balance-sheet crunch becomes a nuisance instead of a catastrophe.
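Two of the ideas above, content addressing and ongoing proof that the bytes remain intact, can be sketched with nothing more than a hash function. This is a deliberately simplified illustration: the Peer class and helper names are hypothetical, plain SHA-256 stands in for real IPFS content identifiers, and the challenge-response check is far simpler than Filecoin's actual storage proofs (it assumes the verifier also holds the data).

```python
import hashlib
import os


def content_id(data: bytes) -> str:
    # The address is derived from the bytes themselves.
    return hashlib.sha256(data).hexdigest()


class Peer:
    def __init__(self):
        self.blocks = {}

    def put(self, data: bytes) -> str:
        cid = content_id(data)
        self.blocks[cid] = data
        return cid

    def get(self, cid: str):
        return self.blocks.get(cid)

    def prove(self, cid: str, nonce: bytes):
        # Answer a storage challenge; requires still holding the bytes.
        data = self.blocks.get(cid)
        return None if data is None else hashlib.sha256(nonce + data).hexdigest()


def fetch(peers, cid):
    # Any peer may serve the content; the hash check makes the source irrelevant.
    for peer in peers:
        data = peer.get(cid)
        if data is not None and content_id(data) == cid:
            return data
    return None


scroll = b"Elements, Book I"
peers = [Peer(), Peer(), Peer()]
cid = [p.put(scroll) for p in peers][0]
peers[0].blocks.clear()  # one peer loses its copy
recovered = fetch(peers, cid)

nonce = os.urandom(16)  # fresh challenge, so old answers cannot be replayed
expected = hashlib.sha256(nonce + scroll).hexdigest()
```

Losing one copy does not lose the scroll, and a peer that has dropped the data cannot answer a fresh challenge, so in an incentivised network it would stop earning.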

The Lesson in Hindsight

Alexandria burned because the best minds of its age had no alternative. Scrolls were physical, copies were scarce, and distance was measured in months at sea. Today, we live in a different century with better tools. If knowledge still disappears, it is by our choice, not our limits.

Every outage, purge, or blackout we suffer is a warning flare: the old risks are back, just wearing digital clothes. However, the cures already run in the background of the internet we use daily. We decide whether to rely on single servers or scatter our memories across many.

While this historic event is something of the past, the lessons we can learn from it are ever more present in today’s society. The idea of decentralized storage may pass you by, but when applied to the story of Alexandria, it’s hard to ignore.

Keep In Touch

Follow us on X: https://x.com/_DSAlliance

Follow us on LinkedIn: https://www.linkedin.com/company/decentralized-storage-alliance

Join DSA: https://www.dsalliance.io/members

The Rapidly Changing Landscape of Compute and Storage

Why Decentralized Solutions Make More and More Sense

The Decentralized Storage Alliance presented a panel in June alongside Filecoin Foundation featuring some of the top names in the decentralized physical infrastructure space (DePIN), including representatives from Eigen Foundation, Titan Network and IO.net.

The panelists were Robert Drost, CEO and Executive Director, Eigen Foundation; Konstantin Tkachuk, Chief Strategy Officer and Co-Founder, Titan Network; and MaryAnn Wofford, VP of Revenue at IO.net. The panel was moderated by DSA’s Ken Fromm and Stuart Berman, a Startup Advisor and Filecoin network expert. The event was hosted by the DSA and the Filecoin Foundation.

One of the points made early in the discussion was about the growing consensus on what the nature of DePIN is and why it’s so important. DePIN approaches not only move governance of compute and storage resources to the most fundamental levels, they also create global markets for unused and untapped compute and storage. These resources can be within existing data centers, but also in consumer and mobile devices.

Panelists were also quick to agree that the benefits of these new approaches are being realized right now with exceedingly tangible results. Instead of traction being far off in the distant future, it was clear that developers are using DePIN infrastructure now and benefiting from it.

Other topics explored included their strategies for gaining institutional adoption as well as their visions of DePIN in the future. Here are some highlights of the conversation.

On The Benefits of Their Solutions

Robert Drost, Eigen Foundation

EigenLayer has three parts to its marketplace that allow ETH and any ERC-20 holder to restake their tokens in EigenLayer and direct them towards any DePIN as well as any cloud project that wants to have elastic security and ultimately be able to deploy not just decentralized infrastructure but verifiable infrastructure.

By restaking tokens and securing multiple networks, users create a shared security ecosystem that allows for the permissionless development of new trust-minimized services built on top of Ethereum's security foundation.

MaryAnn Wofford, IO.net

IO.net hosts 29 of the biggest open source models on our platform through one central API. That's one build that you have to have, and then all of a sudden you have all of these various models that you could test your applications with. We provide free tokens to gain access to models. We'll give you a certain amount a day and with some models like DeepSeek, we'll give you a million.

IO.net is one of the unique players that allows you to pay in crypto as well as gain rewards for doing so. For example, many organizations that have excess capacity with GPUs can post these to IO.net. They can say that their GPUs are available and they will get a reward from us because we are leveraging the Solana crypto network to support resources.

This decentralized approach gets you away from single siloed vendors who want to take over the entire marketplace and be defined as early winners. At the same time, we give the power to developers to create new alternatives, new options, new functionality, and bring new things to market, which is what we all live for and want to bring to our daily lives.

Konstantin Tkachuk, Titan Network

Titan Network is a DePIN network building an open source incentive layer that supports people across the globe to aggregate idle resources and power globally connected cloud infrastructure. Essentially we help people to share idle resources and we help enterprises to leverage those idle resources for their benefit at a global scale.

A powerful feature of the Titan Network is the ability to return the power to people to benefit from the growth of AI, storage, compute, and beyond with the devices that they already have and already own. You have the ability to share your resources, whether you have a personal computer that you want to share – which is how Bitcoin allowed you to do so in the early days – or a mobile phone that you provide for Titan Network applications to run TikTok and other CDN-like services right from your device.

On the Need for DePIN

Robert Drost, Eigen Foundation

DePIN is a whole re-imagining of what the public cloud looks like. The public cloud is something that in many ways looks decentralized but has actually become pretty centralized in terms of the governance and the control as well as the API software stack that AWS, Azure, and Google Cloud offer.

It's part of the reason why it's so hard for somebody to write software once and deploy it on all three clouds. It's also led to a lot of pricing inefficiency and also led to data being stuck in certain clouds. All of the cloud providers love data to go in for free and then leaving the cloud costs about $40 per terabyte for bandwidth, which some people have estimated in the US is approaching a 99% gross margin, meaning that it's a hundred times the raw cost.
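The arithmetic behind the "hundred times the raw cost" remark is worth spelling out. The snippet below takes the two figures quoted above ($40 per terabyte and a 99% gross margin) at face value; the function name is just for illustration.

```python
def raw_cost(price: float, gross_margin: float) -> float:
    # gross margin = (price - cost) / price, so cost = price * (1 - margin)
    return price * (1 - gross_margin)


price_per_tb = 40.0                         # quoted egress price in USD per TB
cost_per_tb = raw_cost(price_per_tb, 0.99)  # about 0.40 USD per TB
markup = price_per_tb / cost_per_tb         # about 100 times the raw cost
```

In other words, at a 99% gross margin only about 40 cents of every $40 covers the underlying bandwidth.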

DePIN allows us to actually move the governance all the way down to the fundamental level. It opens up the ability for anyone to run hardware using great technology like IO.net on the AI compute side and Filecoin on the storage side. Blockchains give us a lot of flexibility in the ability to create and run almost any possible software to replace the current infrastructure stack – and ultimately the platform-as-a-service and software-as-a-service stacks – with one unified stack across all clouds, including the big three. It's actually one big cloud of compute and storage.

MaryAnn Wofford, IO.net

To add to this, DePIN is a compute resource that is truly global because you're not really tied to a particular data center. The workloads for AI inference, for example, can be served to your end users at the point of access of where they're at.

That's one of the key things people come to us for – the ability to be agnostic and to be highly flexible in terms of compute resources. You're not dedicated to long-term contracts and you're not dedicated to one particular region. There's just a tremendous amount of flexibility as to where the access point of compute lies, creating a wider marketplace for compute resources.

Konstantin Tkachuk, Titan Network

I would also like to add that we often forget in the DePIN space that a large supplier of resources is average people around the globe getting the opportunity to share and benefit from the infrastructure that they already own. These physical devices, computers, data center-grade infrastructure, and anything in between can now be shared and the people who own these devices can get a benefit from it.

So all of this infrastructure is part of a movement that enables people around the globe to start contributing and be part of this value creation loop. Whether we talk about AI, storage, or compute – all of the amazing developments that are happening – people are able to share their resources and companies are able to benefit from this infrastructure. This is a fascinating movement that brings back the power from centralized data center-grade complexities to just people around the globe.

On DePIN Success Stories and Traction in the Market

Robert Drost, Eigen Foundation

EigenLayer is very much a channel partner play that includes B2B2C as well as B2B-to-Institutions. We have increasing relationships directly with public cloud providers. This means we're integrating and supporting their software stack. It also means that these major cloud providers are becoming operators inside of EigenLayer. We have over a thousand – many of them serious data center providers – who are happy to operate on the networks.

I think the misconception about it is that decentralized physical infrastructure means that we would not have the big three cloud providers. They can totally play in the market, they just have to actually operate and run nodes. And with EigenLayer we're seeing that we actually have operators being run by the cloud providers themselves for various [web3] protocols.

MaryAnn Wofford, IO.net

We are seeing a number of really key wins, particularly in multimodal AI applications specifically on the inference side of things – which we feel is a major use case going forward. We're working with a number of vendors who are building their own models – such as voice and imaging models – who want to make sure they're serving these in a timely manner to their end users.

We are seeing millisecond responses in terms of inference with these models and in other interactions. We know that we're just at the tip of the spear in terms of what we're able to do with AI applications and serving up multimodal capabilities, but [this use and its responsiveness] stands out as one of the key use cases we're seeing with the DePIN infrastructure that we have.

Konstantin Tkachuk, Titan Network

The biggest success we find is that we're enabling enterprise customers of traditional web2 services such as content delivery networks and/or compute and storage services to really benefit from the infrastructure we are aggregating at a community level. This really shows the potential of distributed DePIN systems in general where infrastructure is crowdsourced.

For example, we're actively working with TikTok in Asia and enabling TikTok to save more than 70% of their CDN costs, simply because they are connecting to user devices and using these devices as CDN nodes to share the content in Asia. This capability is possible due to our coordination layer, which brings a level of transparency and a reward infrastructure, all the while maintaining performance at a level equal to, and sometimes even better than, traditional cloud infrastructure.

This is where we see a lot of shift compared to previous cycles where blockchain was perceived as something slower or more complicated to use compared to traditional cloud. I think now we are at the inflection point of blockchain where we can do the same things with the same quality or even better due to some new features that are enabled by the blockchain primitives.

On Gaining Institutional Adoption

Robert Drost, Eigen Foundation

Our success with pulling in very important platforms-as-a-service and software-as-a-service middleware means that developers are able to much more rapidly build and deploy decentralized applications.

It means that over time, when we're looking at the transition, we will actually see major software vendors such as Snowflake, Cloudflare, and others start supporting and deploying their software entirely using blockchain middleware.

It's going to inject real world revenue on the financial side of blockchain, complementing real world assets. If you look at the revenue in the public cloud space, you can see citations on the big three US public clouds of many hundreds of billions of dollars of revenue. If you add in the software and others, you start looking at trillions of revenue that's being run in the infrastructure space. Just imagine all of that being settled via tokens in the crypto space.

MaryAnn Wofford, IO.net

Our ideal customers are the developers in small teams who are testing new applications, testing new capabilities – these exist inside of enterprises and big industries.

We interact a lot with the innovation lab side of big enterprises. We interact with test and dev groups. Although we're new, there are pockets within enterprise organizations that need speed, that need a really fast way to get a project up and running to prove value to the senior executive teams without significant exposure.

We saw this working recently with a couple of oil and gas companies where they wanted to build out test models for some applications they were thinking about, and they just wanted to move quickly. They didn't want to go through the internal process of getting approval.

This is just one aspect but our true North Star and the type of profile that we want to grow our business on is really these types of developers, the small teams that want to innovate and deliver quickly and then make sure that they can continue to grow their applications and ideas out.

Konstantin Tkachuk, Titan Network

What really shines and what really helped us to capture this institutional adoption was focusing on providing the service for an enterprise that's complete and whole. The web3 space made a little bit of a mistake in the beginning where we tried to provide access to one specific resource and weren’t solving the customer problem as a whole.

Now we are all maturing and figuring out that customers need complete solutions. They don't want to figure out how to do the integration. They want to just get the service and run with it. We are at this level where we're starting to provide services to those who just need it [as is] as well as providing additional functionality on top of it for those who want to build their own infrastructure and solutions by themselves.

More and more teams will see the value in using infrastructure that is functional and will deliver more value while also being cheaper or designed in a different way. The way we are building our infrastructure is that the end user of our partners should never know whether they use traditional cloud or DePIN infrastructure.

Stuart Berman, Filecoin Network Expert

Providers like Filecoin have massive capacity. We have exabytes of data that we store in a fully decentralized fashion. Anybody can spin up a node and start storing data or it can be a client and start using Filecoin services from any one of or multiple providers across the world, irrespective of location. You can specify location or say I don't care where it's located.

Moving forward is going to take a bit of effort to get us to this vision of what we're talking about, which is that users shouldn't really care about the underlying technology, but they should care about its capabilities, its features and their ability to control systems, monetize their data, and get the most amount of value out of the information that they either own and control or are part of some ecosystem.

Public data doesn't have severe requirements around privacy and access control, so it is a good place to start, and that's where we've made a lot of inroads. But there's a lot of work to be done to realize the vision of full decentralization and the promises it brings.

On The Impact of DePIN on the Future

Robert Drost, Eigen Foundation

EigenLayer also supports things that you don't have in the regular web2 space, such as Proof of Machinehood, which is an analog to Proof of Humanity. For example, there was an AI coding project recently where it came out that it was all a fraud. There were 700 engineers pretending to be AI models building applications. Verifying that there's a real AI model running versus a human is actually a valuable thing.

[One of our partners] supports another great proof which is Proof of Location. You can actually see that your protocols are running across all the continents or you can provide benefits and higher rewards for people who are supporting your protocol in different parts of the world.

We also support something called “attributable security” where if there is a violation of the decentralized protocol, the stakers' funds are not just burned, they are given to the participants. You can actually have what looks a little bit like insurance – although it's really more like legal restitution in that if you have losses then you will receive compensatory funds for what you lost.
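To make the restitution idea concrete, here is one possible way slashed funds could be divided among harmed participants. It is purely an illustrative assumption, not EigenLayer's actual mechanism: the function name, the pro rata rule, and the cap at each participant's loss are all choices made for this sketch.

```python
def redistribute(slashed: float, losses: dict) -> dict:
    """Split slashed stake among harmed participants, pro rata to their
    losses, never paying anyone more than they lost."""
    total = sum(losses.values())
    if total == 0:
        return {name: 0.0 for name in losses}
    pool = min(slashed, total)  # compensation is capped at total losses
    return {name: pool * loss / total for name, loss in losses.items()}


# Illustrative numbers: 60 units slashed against 120 units of losses.
partial = redistribute(60, {"alice": 30, "bob": 90})
# More than enough slashed: everyone is made whole, no more.
full = redistribute(500, {"alice": 30, "bob": 90})
```

With a shortfall each participant recovers the same fraction of their loss, and with a surplus nobody is paid beyond what they lost, which is what separates restitution from a windfall.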

MaryAnn Wofford, IO.net

Decentralization of compute and storage gives developers the tools to unlock innovation. Developers have certainly been siloed into an ecosystem where only a couple of players benefit; we could count on our hands the players that have really driven the recent economic model for software development.

It's who really won that battle in terms of the VCs, the hyperscalers, etc. Decentralized infrastructure provides the ability to create new things. It will provide the scale that's going to be needed with a very low upfront investment in order to test new capabilities such as AI models.

Konstantin Tkachuk, Titan Network

The opportunities to allow people to benefit from the growth around them is an incredible feature. Having the ability to bring people back into the growth loop on a societal level will help offset all of those fears of AI replacing them or something else replacing them.

Now they can provide services to AI, which pays them on a daily basis for running these services. This powerful inclusion of people back into the loop is something for which we'll see a lot of appreciation and value, especially if we can communicate this well on various channels and levels.

Stuart Berman, Filecoin Network Expert

The core aspect of decentralization is user control. It's not entirely about distribution – it's about who can set parameters, who can set prices, who can decide who can see my data, who can process it, who can store it.

This is critical, and something we've seen in the decentralized storage space is that we've gone from storing data to being able to do compute-over-data. Ultimately what are people getting out of this in terms of their value for their information?

I don't think of smart contracts as much technically as I do in terms of it being a business agreement or a personal agreement in a true legal contractual sense. You can use this data for that and I can control who has access to it and how often they have access to it and for what purposes they have access to it.

We're even seeing regulations now being developed, in particular around Data as an Asset class, which gives you, the owner of the data, specific legal rights to the information, versus the other way around today – which is you turn the data over to somebody and they seem to possess rights to it.

Berlin Decentralized Media Summit, June 2025

The Berlin Decentralized Media event was held over two days at two different venues with Friday night's session hosted by Publix, a centre for journalism in Berlin, while Saturday morning was in the Berlin "Web3 Hub" space, thanks to Bitvavo.

On Friday MC and co-organizer Arikia Millikan introduced the event, the topic (she has been working in the space for a number of years), and keynote speaker Christine Mohan, whose CV ranges across names such as decentralized journalism startup Civil, the New York Times and the Wall Street Journal. She talked about the need for decentralization before journalism is hollowed out by a combination of budget constraints and the replacement of real investigation by increasingly syndicated content produced with little regard to its value as information for readers, the priority being to drive engagement with platforms at low cost.

The theme was expanded in a panel, where she was joined by Sonia Peteranderl and Wafaa Albadry, along with the airing of concerns regarding trustworthiness of content, the persistence of information, and increasing censorship. Setting the stage for the next day's discussion might have left the audience feeling bleak, but along with the panelists' own work, Olas' Ciaran Murray, and Filecoin's Jordan Fahle talked to us about some of the decentralized storage tools and techniques that are available to help address these issues.

The dinner was well set up for ongoing conversations, with some prompting from Miho and Paul on a range of relevant topics. Conversations inside and outside in Publix' garden and passionate discussions were visibly appreciated by the participants. Themes covered the gamut of issues introduced, as the open-ended and informal setting sowed the seeds of ongoing collaboration over a plate and a drink.

Saturday was a more substantial set of talks, panels, and sponsor presentations, addressing in turn various aspects in more depth. After being welcomed and introduced to the Web3 Hub, Filecoin's Jordan Fahle talked about how fast content disappears from the Web (often within a few years), and how Filecoin's content-addressing model of blockchain-backed decentralized storage can help not only keep content around, but help identify its provenance - a key need for quality journalism.
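As a rough illustration of why content addressing helps with both persistence and provenance, the sketch below derives an address from the bytes themselves and re-checks it on every read. It is a toy in-memory model: it uses a plain SHA-256 hex digest as a stand-in for the content identifiers used on real networks, and `ContentStore` and `content_address` are hypothetical names.

```python
import hashlib


def content_address(data: bytes) -> str:
    """Derive an address from the bytes themselves (here: hex SHA-256)."""
    return hashlib.sha256(data).hexdigest()


class ContentStore:
    """Toy in-memory store keyed by content address."""

    def __init__(self) -> None:
        self._blobs: dict[str, bytes] = {}

    def put(self, data: bytes) -> str:
        address = content_address(data)
        self._blobs[address] = data
        return address

    def get(self, address: str) -> bytes:
        data = self._blobs[address]
        # Re-hash on read: a mismatch means the stored bytes were altered.
        if content_address(data) != address:
            raise ValueError("content does not match its address")
        return data


store = ContentStore()
article = b"Original reporting."
address = store.put(article)
edited_address = content_address(b"Original reporting, quietly edited.")
```

Because the address depends only on the content, any copy held by any provider can be checked against it, and an edited version necessarily gets a different address.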

The day's first panel was presented by Arkadiy Kukarin of Internet Archive, River Davis of Titan, and Alex Fischer of Permaweb Journal, exploring preservation: how to ensure information doesn’t disappear from the Web when, in a modern-day equivalent of the burning of the Library of Alexandria, someone makes the all-too-common decision to stop paying for centralized cloud-based storage. The discussion revolved around censorship-resistance and the simple ability to protect information long-term, both of which are a direct consequence of well-designed decentralisation protocols. It also covered the place of metadata in enabling persistence in a decentralized environment, and the tradeoffs required because storage isn't in some magical cloud but is always, in the end, on physical devices in real places.

Security expert Kirill Pimenov then discussed messaging applications and their security properties, dissecting the landscape and the depressing reality of common communication systems. As well as high-quality platforms like Matrix and SimpleX, he also noted the need to consider reality - there possibly isn't so much need for concern about someone finding out what you asked your friends to bring to dinner, and it is important to be able to communicate, even if you have to think about how to do so knowing you have low security.

Christine Mohan moderated the next panel, with Liam Kelly of DL News, journalist and AI specialist Wafaa Albadry, and Justin Shenk of the Open History project. The panel considered the issues and changes that come with the increasing use of AI tools in journalism. These ranged from the ethical use of deepfakes to protect sources to the impact of AI in the newsroom on trust and engagement, but the speakers also noted the crucial role of people and their own skills.

Among presentations describing solutions, Ciaran Murray presented how Olas uses decentralized technology to support better recognition of quality, and matching value flows.

A talk from the Electronic Frontier Foundation's Jillian York, along with artist Mathana, explored the issues of censorship in more detail, looking at how media have portrayed, or censored, various issues over many decades. From the so-called counter-culture of the 1960s to contemporary recognition of the rights of marginalised communities, they elaborated on how decentralization has played an important role in enabling a breadth of perspectives to be available and represented, as a bulwark against tendencies towards repression of differences and against totalitarianism directing the people, instead of the democratic ideal of the inverse situation.

Arikia presented the work of Ctrl+X to decentralise publishing and enable journalists in particular to own and continue to monetize their work. Matt Tavares of Protocol Labs gave a talk motivated by the collaborative use of OSINT (open-source intelligence; he has an intelligence background, and for the rest of us that means "data that is publicly available"), and how it isn't properly credited and remunerated over its lifetime but, crucially, should and could be.

A final panel of Matt Tavares, Arikia Millikan and Ciaran Murray considered the tension between privacy and transparency. The panel recognized that the topic isn't confined to the world of decentralized media: the needs and rights of the public to access important information, particularly where it touches on the powerful interests that seek to regulate, moderate, or benefit from our behaviour, have to be balanced against the ability of individuals to exercise their rights through a basic assumption of privacy.

With that, and with thanks to the many people who worked to put it together, including the sponsors, the day formally wrapped up. Once more, however, the conversations continued, spilling into the surrounding spaces, outside, and further. We look forward to the next edition to continue our support for the development of decentralized media, one of the many fields where innovative adoption of Decentralized Storage is bringing concrete benefits.

The DePIN Vision: Insights from Lukasz Magiera

Welcome and thank you for sitting down with us. You have an expansive vision of compute and storage. Can you explain this vision and what drives it?

Lukasz Magiera: My main goal is to make it possible for computers on the Internet to do their own thing and not have to go through permissioned systems if you don't need to. As a user I just want to interact with an infinitely scalable system where I put in money and I get to use its resources. Or I bring resources – meaning I host some computers – and money comes out.

The middle part of this should ideally be some kind of magical thing which handles everything more or less seamlessly. This is one of the main goals of decentralized systems like Filecoin and DePIN – to build this as a black box and, depending on who has resources, establish a really simple way to get and share resources that is much more efficient than having to rely on the old-school cloud.

So you're looking not only at the democratization of compute and storage but also increasing the simplicity of using it?

Lukasz Magiera: There are a whole bunch of goals but all of them are around this kind of theme. There is the ability to easily bring in resources into the system to earn from those resources. Then there's the ability to easily tap into these resources.

And then there's more interesting capabilities – let's say I'm building some service or a game and it might have some light monetization features, but I really don't want to care about setting up any of the infrastructure. This is something that the cloud promised but didn't exactly deliver – meaning that scaling and using the cloud still requires you to hire a bunch of people and pay for the services.

The ideal is that you could build a system which is able to pay for itself. The person or the team building some service basically just builds the service and all of the underlying compute and storage is either paid for directly by the client or the service pays for its resources automatically via any fees while giving the rest of its earnings to the team.

It's essentially inventing this different model of building stuff on the Internet whereby you just build a thing, put it into this system, and it runs and pays for itself – or the client pays for it without you having to pay for the resources yourself. In this way, future models of computing will become much more efficient.

That’s certainly a grand vision. What does it take to get there?

Lukasz Magiera: There is a vision but it is, I would say, a distant one. This is not something we will get to at any moment. Filecoin itself took about eight years now to get to where it is and this was with intense development. I know we can get there but this is going to be a long path. I wouldn't expect to have the system I envision that's even approximately close to this in less than 10 years.

THE SOFTWARE BEHIND THE FILECOIN NETWORK

Let’s step back a bit into where we are now. You are the co-creator of Lotus, can you describe it to us?

Lukasz Magiera: Lotus is the blockchain node implementation for the Filecoin network. And so when you think of the Filecoin blockchain, you think mostly about Lotus. It doesn't implement any of the actual infrastructure services, but it does provide all of the consensus to all of the protocols that the storage providers provide.

The interesting thing is that clients [those who are submitting data to the network] don't really have to run the consensus aspect. They only have to follow and interact with the chain – and they would only do this just for settling payments as well as for looking at the chain state to see that their data is still stored correctly.

On the storage provider side, there are many more interactions with the chain. Storage providers are the ones that have to go to the chain and prove that they are still storing data. When they're wanting to store new pieces of data, they have to submit proof for that. Part of the consensus also consists of mining blocks and so they have to participate in that block mining process as well.

Can you explain the difference between Lotus and Curio?

Lukasz Magiera: There are multiple things. Lotus is Lotus and Lotus Miner. Lotus itself is the chain node. Lotus Miner is the miner software that storage providers initially ran. Lotus Miner is now in maintenance mode and Curio is replacing it. Lotus itself is not going away. It is still something that is used by everyone interacting with the Filecoin blockchain.

Lotus Miner is the software that initially implemented all of the Filecoin storage services. When we were developing the Lotus node software, we needed software to test it with – if you’re building blockchain software, then you need some software that mines the blocks according to the rules. This was the genesis of Lotus Miner.

Initially it was all just baked into a single process called Lotus. We then separated that functionality into a separate binary and called it Lotus Miner. The initial version, however, had limited scalability. When we got the first testnet up in 2019, several SPs wanted to scale their workloads to more than just a single computer and so we added this hacky mode to it so it could attach external workers. This was back in the days where sealing a single sector took 20 hours and so there was no consideration of data throughput. Plus scheduling wasn't really an issue because everything would take multiple hours.

Over time, the process got much better and much faster and SPs brought way more hardware online and so we had to rewrite it to make it better and more reliable. We didn't have all that much time and the architecture wasn't all that great but we did a rewrite that was reasonable. It could attach workers and workers could transfer data between them. This is what we launched the Filecoin Mainnet with in 2020.

For a while thereafter, most of the effort was making sure the chain didn’t die and so very little of that effort was put towards making Lotus Miner better. At some point, though, we got the idea, “What if we just rewrite Lotus Miner from scratch – just throw everything away and start fresh.” And so this is how Curio came about. The very early designs started about two years ago. Curio launched in May 2024.

Can you describe Curio in more detail?

Lukasz Magiera: Curio is a properly scalable, highly available implementation of Filecoin storage provider services. More broadly, it is a powerful scheduler coupled with this runtime that implements all of the Filecoin protocols needed for being a storage provider. The scheduler is really the thing that sets it apart from Lotus Miner.

It is really simple to implement any DePIN protocol where you have some process running on some set of machines where you need to interact with a blockchain. Basically, it lets us implement protocols like Proof of Data Possession (PDP) in a matter of a week or two. Really the first iteration of PDP took me a week to get running – it went from nothing to being able to store data and to properly submit proofs to the chain.

Is Curio then able to incorporate new and composable services for storage providers to provide?

Lukasz Magiera: Yes. The short version is that if you have a cluster, you just put this Curio thing on every machine – you configure it to connect with each other and the rest figures itself out. The storage provider shouldn't really worry about any of the scheduling stuff and any of the things that the software should do by itself.

If something doesn't work, you have proper alerts. You have a nice web UI where it is easy to see where the problems are. And obviously for the architecture, it is much more fault tolerant where you can essentially pull the plug on any machine and the cluster will still keep running and will just keep serving data to clients.
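The fault-tolerance property described here, where any machine can drop out and its work is picked up by the rest of the cluster, can be illustrated with a toy scheduler. This is a minimal sketch under simplifying assumptions (a single shared task table, least-loaded placement); the `Cluster` class and the task names are hypothetical and do not describe how Curio is actually built.

```python
class Cluster:
    """Toy shared task table; every name here is illustrative."""

    def __init__(self, nodes):
        self.alive = set(nodes)
        self.owner = {}  # task -> node currently responsible for it

    def schedule(self, task):
        """Assign the task to the live node with the fewest tasks."""
        if not self.alive:
            raise RuntimeError("no live nodes")
        load = {node: 0 for node in self.alive}
        for node in self.owner.values():
            if node in load:
                load[node] += 1
        # sorted() makes ties deterministic (alphabetical order).
        chosen = min(sorted(load), key=load.get)
        self.owner[task] = chosen
        return chosen

    def fail(self, node):
        """Simulate pulling the plug: reassign the dead node's tasks."""
        self.alive.discard(node)
        orphaned = [t for t, n in self.owner.items() if n == node]
        for task in orphaned:
            self.schedule(task)
        return orphaned


cluster = Cluster(["a", "b", "c"])
for task in ["seal-1", "seal-2", "prove-1"]:
    cluster.schedule(task)
moved = cluster.fail("b")
```

After `fail("b")`, every task in `cluster.owner` belongs to a node that is still alive, which is the behaviour an operator would expect from a cluster without a single point of failure.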

THE STATE OF DECENTRALIZED STORAGE

Where are we with decentralized storage? What are you and your team building near term and then what does it look like long term?

Lukasz Magiera: Where we are now with Filecoin is we have a fairly solid archival product. It could use some more enterprise features for the more enterprise clients – this is mostly about access control lists (ACLs) and the like.

We just shipped Proof of Data Possession and that should help the larger storage provider. Then you have a whole universe of things we can do with confidential computing as well as developing additional proofs – things that enterprise would want such as proof of data scrubbing, i.e. proving there’s been no bit rot. We can also use the confidential compute structure to do essentially full-blown compute on data. We could be the place to allow clients to execute code on their own data. And this could include GPU-type workloads fairly easily as far as I understand.

On the storage provider side right now, you have to be fairly large. You have to have a data center with a lot of racks to get any scale and make a profit. You need a whole bunch of JBODs (Just a Bunch Of Disks) with at least a petabyte of raw storage to make the hardware pay for itself. You need hardware with GPUs and very expensive servers for creating sectors. The process is much better now with Curio, but we can still do better.

The short term plan from Curio is to establish markets for services so that people don't have to have so many GPUs for processing zk-snarks [for the current form of storage proofs]. Instead, let them buy services for zk-snark processing and sector building so they don’t need these very expensive sealing servers. Then all they really need is just hardware and decent Internet access – and for that they maybe don’t even need a data center at all. And so the short term is enabling smaller scale pieces of the protocol.

Can you talk about these changes in the processing flow for storing data?

Lukasz Magiera: Essentially, we have to separate the heavy CapEx processing involved in creating sectors for storage [from the process of storing the data]. You have storage providers who are okay with putting up more money, but they don't want to lock up their rewards. They just want to sell compute.

This kind of provider would just host GPUs and would sell zk-snarks. Or they would host SuperSeal machines and either create hard drives with sectors that they would ship or they would just send those sectors over the Internet.

Separately you have storage providers who want to host data and just have Internet access and hard drives. The experience here should really just resemble normal proof-of-work mining. You just plug boxes into the network and they just work whether it's with creating sectors or offering up hard drives.

Can you talk more about the new proofs that have been released or that you’re thinking about?

Lukasz Magiera: Sure. Proof of Data Possession (PDP) recently shipped and this is where storage providers essentially host clear text data for clients. The cool thing with PDP is that on a wider level, it means that clients can upload much smaller pieces of data to an SP and we can finally build proper data aggregation services where clients can essentially rent storage that can read, write, maybe even modify data on a storage provider. When they want to take a snapshot of their data, they just make a deal and store it and so this simple proof becomes a much better foundation for better storage products.
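To give a feel for what proving possession of clear-text data can look like, here is a generic challenge-response sketch built on a Merkle tree: the verifier keeps only a root hash, asks for a randomly chosen chunk, and checks the chunk against the root using a short path of sibling hashes. This is a textbook construction offered as an assumption-laden illustration, not the PDP protocol that shipped on Filecoin; all function names are hypothetical.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_tree(chunks):
    """Return all levels of a Merkle tree, leaves first."""
    level = [h(c) for c in chunks]
    levels = [level]
    while len(level) > 1:
        if len(level) % 2:  # duplicate the last node on odd-sized levels
            level = level + [level[-1]]
            levels[-1] = level
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def prove(levels, index):
    """Provider side: sibling hashes from the challenged leaf up to the root."""
    path = []
    for level in levels[:-1]:
        path.append(level[index ^ 1])
        index //= 2
    return path


def verify(root, chunk, index, path):
    """Verifier side: recompute the root from the chunk and its path."""
    node = h(chunk)
    for sibling in path:
        node = h(node + sibling) if index % 2 == 0 else h(sibling + node)
        index //= 2
    return node == root


chunks = [b"chunk-%d" % i for i in range(5)]
levels = build_tree(chunks)
root = levels[-1][0]          # the only value the verifier has to keep
challenge = 3                 # in practice chosen unpredictably
path = prove(levels, challenge)
honest = verify(root, chunks[challenge], challenge, path)
cheating = verify(root, b"not the data", challenge, path)
```

The provider can only answer an unpredictable challenge correctly if it still holds the challenged chunk, and the proof stays small no matter how much data is stored.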

We could also fairly easily do attestations around proving there has been no bit rot. We could also do attestations for some data retrieval parameters as well as some data access speeds. Attestations for data transfer over the Internet might be possible but they are also very hard. These things could conceivably feed into an automated allocator so that storage deal-making could happen much more easily.

Some proofs appear easy to do but in reality are hard problems to solve. What are your thoughts here?

Lukasz Magiera: Proof of data deletion is one of those that is mathematically impossible to prove but it is much more possible to achieve within secure enclaves. And so if you assume that a) the client can run code, b) that it can trust the enclave, c) that the provider cannot see or interact with that code, and d) that there is a confidential way to interact with the hard drive – then essentially a data owner could rent a part of a hard drive or an SSD through a secure enclave in a way that only they can read and write to that storage.

It wouldn't be perfect. Storage providers could still throttle access or turn off the servers, but in theory, the secure enclave VM would ensure that read and write operations to the drive are secure and it would ensure that the data goes to the client. So it essentially would be possible to build something where the clients would be able to get some level of attestation of data deletion because essentially the SP wouldn't be able to read the data anyways. In this case you literally have to trust hardware, there's no way around it. You have to trust the CPU and you have to trust the firmware on the drive.

And so as Filecoin grows and adds new protocols and new proofs, can these be included in the network much more easily now because of Curio’s architecture?

Lukasz Magiera: Yes, essentially it is just a platform for us to ship capabilities to storage providers very quickly. If there are L2s that require custom software, it's possible then to work with these L2s to ship their runtimes directly inside of Curio. If an SP wants to participate in some L2s network, then they just check another checkbox or maybe put in some lines of configuration and immediately they could start making money without even ever having to install or move around any hardware.

CLOSING THOUGHTS

Any final thoughts or issues you’d like to talk about?

Lukasz Magiera: Yes, I would love to talk about the whole client side of things with Filecoin and other DePIN networks. Curio is really good on the storage provider side of things but I feel like we don’t have a Curio-style project on the client side yet. There are a bunch of clients but I don't think any of them go far enough in rethinking what the client experience could be on Filecoin and DePIN in general.

Most of the clients are still stuck in the more base level ways of interacting with the Filecoin network. You put files into sectors and track them but it feels like we could do much better. Even basic things like support for erasure coding properly would be a pretty big win. Having the ability to have 10% overhead but have some redundancy. All of this coupled with just better, more scalable software.
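The "10% overhead but some redundancy" trade-off can be made concrete with the simplest possible erasure code: ten data shards plus one XOR parity shard. The sketch below is illustrative only; production systems typically use stronger codes such as Reed-Solomon that survive several losses, and the function names are hypothetical.

```python
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def encode(shards):
    """Append one XOR parity shard to equally sized data shards."""
    return list(shards) + [reduce(xor_bytes, shards)]


def recover(shards_with_gap):
    """Rebuild the single missing shard (marked None) from the survivors."""
    missing = [i for i, s in enumerate(shards_with_gap) if s is None]
    if len(missing) != 1:
        raise ValueError("single parity can repair exactly one lost shard")
    survivors = [s for s in shards_with_gap if s is not None]
    repaired = list(shards_with_gap)
    repaired[missing[0]] = reduce(xor_bytes, survivors)
    return repaired


data = [bytes([i]) * 4 for i in range(10)]  # 10 data shards of 4 bytes each
stored = encode(data)                       # 11 shards in total
overhead = (len(stored) - len(data)) / len(data)

damaged = list(stored)
damaged[3] = None                           # lose any one shard
restored = recover(damaged)
```

Here one extra shard per ten data shards gives 10% overhead and tolerates the loss of any single shard, compared with 100% overhead for keeping a full second copy.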

As a large client, I would just want to put a virtual appliance in my environment and have it be also highly available and be able to talk to Filecoin through a more familiar interface that is much more like object storage.

MetaMe joins Decentralized Storage Alliance

“The DSA is proud to welcome MetaMe into our community of innovators shaping the future of digital sovereignty. Under Dele's visionary leadership, MetaMe is redefining personal data ownership, advancing solutions that empower individuals and uphold the fundamental rights of users worldwide. We look forward to working together to foster open standards, decentralized infrastructure, and a more equitable digital economy.”

Stefaan Vervaet, President, Decentralized Storage Alliance

The Decentralized Storage Alliance (DSA) is thrilled to welcome MetaMe to our global network of pioneering organizations building the future of decentralized infrastructure. MetaMe, founded and led by visionary entrepreneur Dele Atanda, is reshaping how personal data is owned, controlled, and valued in the digital age. In a world where user information is routinely harvested and monetized without true consent, MetaMe offers a powerful alternative: a decentralized, self-sovereign personal data management platform where individuals are in charge of their own digital lives.

At a time when decentralized storage is becoming the foundation for a more secure and equitable internet, MetaMe’s mission perfectly aligns with the DSA’s vision of open standards, user empowerment, and innovation. Together, we are advancing technologies that protect individuals' rights while enabling new forms of ownership and value creation.

Dele Atanda brings a uniquely impressive background to this work. Over the course of his career, he has led major digital transformation initiatives for Fortune 100 companies such as Diageo, BAT, and IBM. He served as an advisor to the United Nations’ AI for Good initiative, authored the influential book The Digitterian Tsunami, and continues to be recognized as a thought leader at the intersection of AI, blockchain, digital rights, and ethical innovation.

Dele’s work is rooted in a deep belief: that technology should serve humanity — not the other way around. His leadership at MetaMe reflects this commitment, building a future where digital freedom and dignity are foundational principles, not afterthoughts.

By joining the DSA, MetaMe strengthens a movement toward a decentralized, user-owned internet where data is secure, portable, and empowering for everyone. We are excited to collaborate with Dele and the MetaMe team as we push the boundaries of what’s possible for personal data sovereignty, decentralized storage, and digital empowerment.

Please join us in welcoming MetaMe to the Decentralized Storage Alliance!

Learn more about their work at metame.com.

The future of storage is decentralized, and we’re just getting started.

Decentralized Media: The Future of Truth in a Trustless Web

'Traditional media is broken. We need to rethink how media works and rebuild this industry from the ground up. I think blockchains and crypto can be tools to create new business models that incentivize quality journalism, with censorship resistance infrastructure and payment rails.'

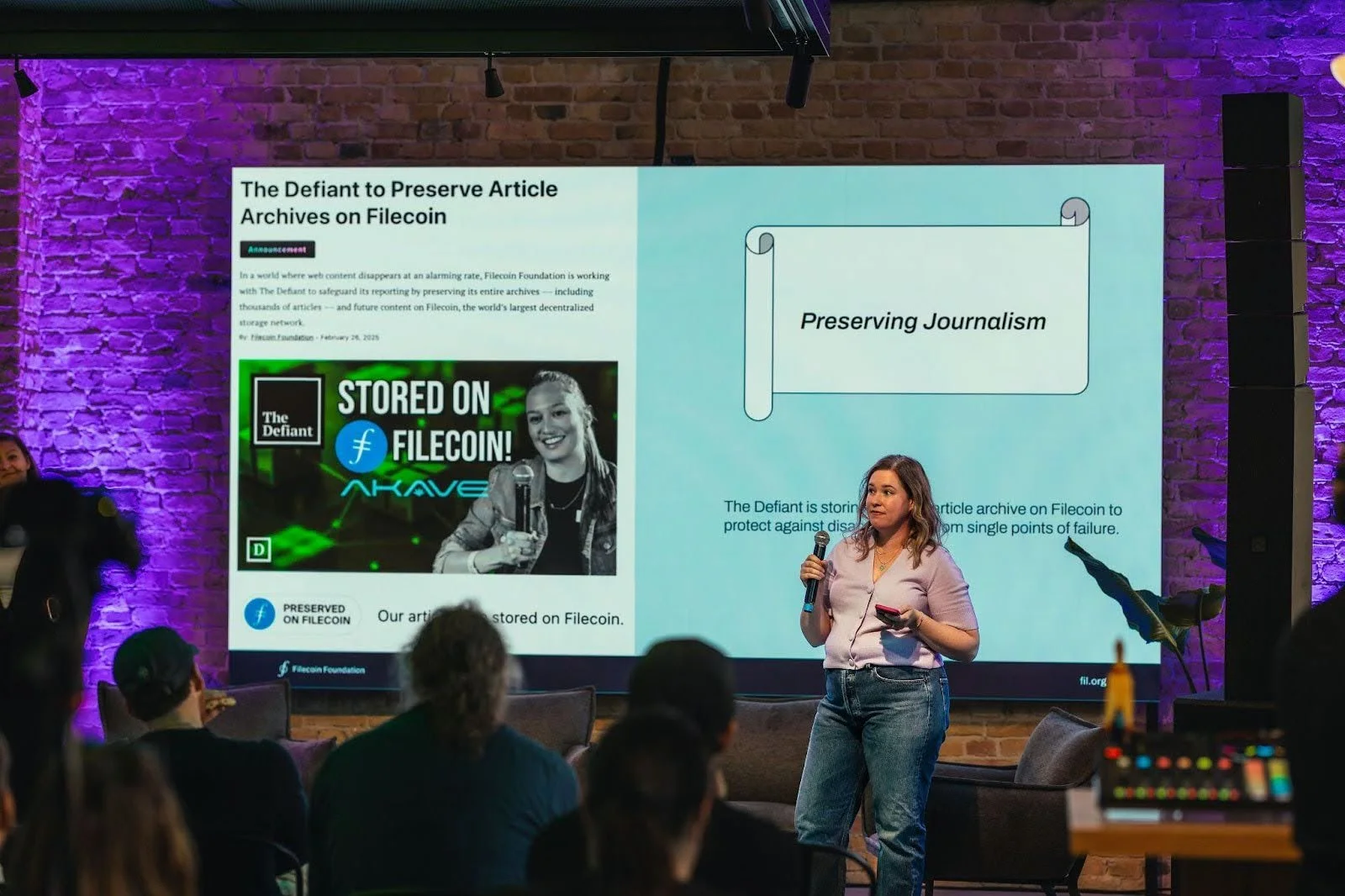

Camila Russo, Founder, The Defiant.

The DSA and The Defiant teamed up on April 22nd for a lively X Space on Decentralized Media. This X Space brought together voices from The Defiant, Filecoin, CTRL+X, and Akave to explore topics that included the importance of censorship resistance in journalism, how blockchain and decentralized storage can preserve truth, the role of AI in content creation and distribution, and the vision for a more sovereign internet. The discussion was led by Valeria Kholostenko (Strategic Advisor, DSA), Cami Russo (The Defiant), Arikia (CTRL+X), Clara Tsao (Filecoin Foundation), and Daniel Leon (Akave), who envisioned new economic models all under the banner of 'The Death of Traditional Media and the Birth of a New Model.'

When asked on X, Cami Russo responded: 'We’re all just pawns in Google’s land.' That stark reality framed much of the discussion. Journalism, she argued, has become beholden to SEO-chasing clicks rather than truth. 'We need to fundamentally rethink how we operate. Crypto and blockchains must be part of how we rebuild.'

Arikia echoed that sentiment with a journalist’s weary wisdom, 'I’ve seen thousands of articles go offline, irretrievable.' Centralized platforms purge content in redesigns, or lose it through sheer neglect. The promise of blockchain? A world where writers, not platforms, are the custodians of their work.

That promise is no longer theoretical. Just last month, The Defiant officially partnered with Akave and Filecoin to migrate its full archive to decentralized storage. The collaboration is a milestone in media resilience, ensuring that every article past, present, and future remains tamper-proof, censorship-resistant, and permanently accessible.

This move means that even if traditional cloud providers fail, crash, or cave to pressure, The Defiant’s journalism lives on. As Daniel Leon explained, 'Now, even something like an Amazon outage can’t take The Defiant offline.' It’s not just about preservation, it’s about protecting the fourth estate from a malignant future.

This partnership demonstrates how Decentralized Storage can empower media orgs to move from dependency to sovereignty. From now on, The Defiant controls its own archive and more importantly, its own destiny.

Storage as Resistance

Clara Tsao dropped the stats: 'Trust in traditional media is at historic lows. Only 31% of U.S. adults, and a paltry 12% of Republicans, express confidence in it.' The solution, she says, lies in 'verifiable, censorship-resistant systems.' Filecoin, the world’s largest decentralized storage network, has worked to secure everything from the Panama Papers to AI training datasets from CERN.

Daniel Leon brought it home, 'A quarter of articles published between 2013 and 2023 are gone.' With The Defiant now preserved on Filecoin via Akave, that content has a digital afterlife. 'This isn’t just about decentralization for decentralization’s sake. It’s about resilience. It’s about control.'

Micropayments, DAOs, and the New Economics of Journalism

If we’re going to rebuild the media, we need to rethink how we fund it. Russo pitched a decentralized newsroom part DAO, part think tank powered by token incentives. Arikia’s CTRL+X is already building the tools: NFT-based licensing, seamless micropayments, and content ownership that aligns with a journalist’s rights, not the platform’s terms of service.

'The era of ‘just do it for exposure’ is over,' she said. Alongside existing models of publishing content for free, or requiring a subscription to an entire service just to read one document, 'Web3 offers an option: pay-per-article access. No more caste system where only the wealthy can read good journalism.'

AI: Frenemy or Fixer?

AI loomed large in the conversation, both as a tool and a threat. 'We’ve had a great experience with AI at The Defiant,' said Russo, noting how it handles routine stories so journalists can focus on deep dives. But she’s wary of the downside. 'If chatbots aren’t attributing their sources, that’s theft. Plain and simple.'

Tsao emphasized AI's reliance on trustworthy data. 'Blockchain lets us verify data provenance. Without that, AI becomes a house of cards.' She cited Filecoin’s role in archiving wartime journalism in Ukraine, proving that authenticity is not just a buzzword but a matter of international justice.

What Comes Next: DJs, Berlin, and Bridging the Gap

The X Space ended with a nod toward the future, namely the upcoming Decentralized Media Summit in Berlin.

Valeria asked: What voices are we still missing?

Cami Russo suggested bringing in traditional media leaders outside the Web3 bubble. Daniel Leon noted the shift happening: 'Two years ago, ‘blockchain storage’ was a red flag. Now, companies are curious. We’re at an inflection point.'

Arikia fully channeled Berlin energy, 'We need DJs. Musicians have been fighting for decentralization for longer than we have. They’ve got the scars and the stories.' Luckily, the leading culture DAO Refraction has you covered. As part of the broader social venture movement alongside projects like Farcaster, Lens, and others reimagining digital culture, Refraction bridges music, media, and Web3. It’s not just about vibes, it’s about building resilient creative ecosystems that can’t be muted or monetized by middlemen.

The Verdict? Decentralization is the Only Way Through

As institutions crumble and AI rewrites the rules of content creation, one thing is clear: the old model is broken. But from Filecoin’s storage rails to CTRL+X’s monetization tools to The Defiant’s AI-enhanced reporting, the scaffolding of a new system is already here.

As Valeria closed the session, she left us with a challenge, not a conclusion.

'The fight for truth is just beginning.'

DSA updates Data Storage Agreement Template

The DSA today published an update for its Data Storage Agreement template.

Developed by the TA/CA Working Group, the template provides a standard form agreement for data storage that can be customized to match the specific parameters of a Storage Deal.

The update adds agreed service levels and costs to the template, enabling a single agreement to cover the needs of a wide range of storage customers. This includes Layer 2 providers, both when they act as a Storage Provider for a customer and when they are themselves a customer of specific Storage Providers that fulfil part of their storage needs.

The template is available as a Google Doc that enables comments, and the DSA welcomes feedback from users of the document, which we can use to update the template in future to meet users’ needs even better. To create a new agreement, make a copy of the document.

It is also available for download as a Word document that can be edited, converted into other formats, and signed by the relevant parties.

Decentralized AI Society and Web3 Organizations Join DSA

In a mark of how the AI industry’s insatiable appetite for data is fueling interest in innovative, dynamic, and cost-effective approaches to securely storing information, the Decentralized Storage Alliance (DSA) today welcomes six new members. The Decentralized AI Society (DAIS), Akave, DeStor, Seal Storage, Curio, and Titan Network have been added to DSA’s growing, global network of leading software, hardware, and solution providers committed to advancing decentralized storage solutions for enterprise.

These companies join founding members AMD, Seagate, Ernst & Young, Protocol Labs, and Filecoin Foundation (FF) in the DSA’s mission to empower enterprises to leverage decentralized data storage and processing.

The Holon Data Report estimates that over 75,000 zettabytes (ZB) of data will be created by 2040, mostly powered by the AI industry’s needs for machine-learning and inference. That’s an exponential expansion: 1000x larger than all data generated in 2020.

The need for storage solutions has never been greater, yet centralized storage providers continue to consolidate the market, limiting data efficiency and pricing. Decentralized storage offers more efficient, robust, and secure services at a better value, creating a space where businesses – of any size – can thrive.

There’s also a growing awareness that if humanity is to enjoy a healthy AI future, people and businesses will need direct control over their data, a framework that will give rise to new business models and monetization opportunities. But that will require verifiable proof of ownership, which, in turn, will demand the presence of reliable, persistent storage infrastructure that bears none of the centralized model’s single-point-of-failure vulnerabilities.

“As our AI-dominated future approaches, it is vital for our shared prosperity and wellbeing that no single storage provider has gatekeeping privileges over the data and computational capacity that will power it,” said Michael Casey, Chairman of the Decentralized AI Society. “In forging ties to the mainstream corporate enterprises that are building that future, the Decentralized Storage Alliance is doing vital work. DAIS is proud to partner with this game-changing community.”

Through collaboration between Web2 and Web3 leaders, DSA aims to create a thriving ecosystem for decentralized data storage, helping businesses integrate decentralized storage networks like Filecoin and other Web3 technologies. Filecoin is a decentralized network that stores files with built-in economic incentives to ensure that data is stored reliably over time and makes decentralized storage – at scale – a real possibility. Additionally, the Filecoin network ensures that data is reliably stored through distributed nodes globally, reducing risks associated with centralized control.

Looking ahead, DSA aims to support enterprises in overcoming challenges related to data integrity, sovereignty, and lock-in while providing a more secure, cost-effective alternative to centralized storage. The DSA is currently focused on developing specifications for Service Level Agreements (SLAs) and access control lists (ACLs). This work will build on the group’s accomplishments since its 2022 launch:

New reference architectures: DSA released a storage reference architecture for the Filecoin network to reduce compute infrastructure while increasing data integrity and network resiliency, making it easier to onboard commercial-grade storage loads to the network. DSA also released a sealing reference architecture built with Supermicro, with benchmark results showing a 6X increase in throughput as well as cost savings.

Advancements in zero-knowledge proof (zk proof) processing that optimize several key steps in the Filecoin network proof process via CPU and GPU optimizations, as well as throughout the Web3 infrastructure stack. The result is an 80% reduction in cost and latency for proof generation, saving Filecoin storage providers money and increasing flexibility in how they use their hardware. It also holds great promise for reducing costs and compute times across the entire Web3 ecosystem.

New open-source software optimizations for the Filecoin network, significantly reducing data onboarding costs by up to 40% and sealing server costs by up to 90%. These network optimizations push forward decentralized storage technologies, advancing the industry and enabling further adoption by enterprises.

DSA is paving the way for a decentralized storage future that empowers enterprises to participate in the rapidly growing data economy.

“Decentralized storage is vital for securing the future data economy. At Akave, we believe in empowering enterprises to take control of their data, managed through on-chain data lakes with verifiable, on-chain transactions. By joining the Decentralized Storage Alliance, we aim to help build a transparent and scalable storage ecosystem, unlocking the full potential of data for everyone,” said Stefaan Vervaet, CEO of Akave.

“As businesses generate and rely on more data than ever, the need for secure, scalable, and cost-effective storage solutions is critical. Joining the Decentralized Storage Alliance aligns with our mission to empower enterprises with innovative, decentralized storage options that offer better security, resilience, and control. We’re excited to collaborate with industry leaders to drive the future of data storage and make decentralized technology accessible for businesses of all sizes.”

Jen King, Co-Founder & CEO of DeStor.

“Collaboration is key to successfully advancing both hybrid and fully decentralized solutions essential to addressing the needs of enterprise, and Seal is thrilled to be part of the DSA in partnership with all of its members.”

Scott Doughman, Chief Business Officer, Seal Storage.

“Decentralized internet data storage is an exciting frontier with a variety of new challenges,” said Andrew Jackson, President, Curio Storage. “These are best overcome together where our varied expertise can find the solutions we each need to get the world heading toward this amazing opportunity for small and large businesses alike. Curio software has from day-1 been lowering the difficulty for SPs to start and to scale. We look forward to building this storage platform with you all.”

"We are excited to join the Decentralized Storage Alliance as it aligns with our mission of empowering communities to become the owners of their digital resources. Decentralized storage solutions hold the potential to revolutionize how data is managed, making it more secure, accessible, and efficient. At Titan Network, we believe that through the aggregation and utilization of idle resources, we can unlock immense value for enterprises and individuals alike. By collaborating with industry leaders within DSA, we aim to bring decentralized infrastructure on par with modern cloud solutions, providing a better, more scalable future for data storage."

Konstantin Tkachuk, CSO and co-founder of Titan Network.

"The Filecoin network is a breakthrough in decentralized data storage, safeguarding humanity's most important information and serving as the backbone for the evolving internet landscape. We look forward to continuing to contribute to the DSA’s accomplishments to advance decentralized storage for enterprises.”

Clara Tsao, a founding officer at Filecoin Foundation.

“Decentralized storage offers immense benefits, including enhanced data security, lower costs, and increased accessibility. The Decentralized AI Society and our other new members bring a wealth of knowledge that will help us push decentralized storage solutions forward and into new markets.”

Daniel Leon, Founding Advisor of the DSA.

Protocol Design in Action: Insights from Irene Giacomelli

Step into the world of cryptography and protocol design with Irene Giacomelli, Protocol Researcher at FilOz, as she shares how she bridges theory and real-world application to tackle the unique challenges of Web3.

BACKGROUND

We love the title protocol researcher. Can you tell us a bit about your background as well as what a protocol researcher does?

Irene Giacomelli: I came from “theory land” in the sense that I started my career in academia. I have a master's degree in mathematics and a PhD in informatics, and I spent a few years in academia publishing papers on cryptography before joining the industry. I started with Protocol Labs in 2019. The fun fact is that when I joined, I didn't know much about blockchain or Web3. I just knew the basic definitions in the space.

I joined to work on the cryptographic aspect of Filecoin – which at that moment in time was still in the design phase. They needed a protocol and cryptographic researcher to understand and overcome the challenge of constructing a blockchain that used useful space as the underlying resource for consensus.

There was no such thing at the time, and even today there are few blockchains that use space as a provable resource for consensus. There were people already working on these topics before I joined and I learned a lot from them. I focused on bridging the theory with the real world. That means results from academic papers often need to be adapted to work within the constraints of a real-world protocol like Filecoin. That is a crucial part of being a protocol researcher.

I kept working on the Filecoin core protocol even after we launched the network, mainly shipping Filecoin Improvement Proposals (FIPs) and keeping an eye on the security of the network. Now that the core protocol is more time-tested, the focus for protocol designers like me has shifted from the initial core work to adding new features and capabilities. We have transitioned to saying, “Let's look at this as a product, as a storage network,” and asking, “What features are needed to increase adoption?” and “What can we build, or even better, what can we unlock so that others can build these types of features for the network?”

The mission of FilOz is to support Filecoin and allow everyone in the ecosystem to be able to use it and improve it. This fits very well with the line of work I was doing. And so in April, I was happy when they asked me to join the team and I was happy to say yes.

DESIGNING Web3 PROTOCOLS

Let's talk about protocols. What makes working with Web3 protocols so challenging?

Irene Giacomelli: There are a few challenges when you try to design a protocol in Web3. One challenge comes from the fact that Web3 protocols need to work in the decentralized world. So here you have a bunch of parties that can collude or create Sybil attacks, and there is no trusted central authority to fall back on. That makes for a design challenge, because in classical cryptography it's natural to assume that two different entities, two parties in the protocol, won't collude because they have different interests. In decentralized protocols, this is not the case. You cannot assume this, and so you need to evaluate all possible attack vectors. Also, more parties means that the design needs to be built to scale, which is not always trivial.

Another difference is how to use crypto incentives, that is, how to use economic incentives the right way. In classical cryptography, you usually want to prove that something is impossible or that the probability of it happening is very low. In protocols with economic incentives, it's not always black and white: there are multiple scenarios that can occur, and you rank them all using profit functions. This is a challenge but also a resource, because incentives can solve problems that classical methods cannot.
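To make the idea of ranking scenarios with profit functions concrete, here is a minimal sketch. It is an illustration only and not Filecoin's actual economic model: the `Strategy` fields, the numbers, and the `expected_profit` formula are all assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Strategy:
    """Hypothetical description of one way a provider could behave."""
    name: str
    reward: float          # payment earned if no misbehavior is detected
    cost: float            # operating cost of following the strategy
    collateral: float      # deposit lost if misbehavior is detected
    detection_prob: float  # probability that misbehavior is detected


def expected_profit(s: Strategy) -> float:
    """Assumed profit function: expected reward minus cost minus expected penalty."""
    return (1 - s.detection_prob) * s.reward - s.cost - s.detection_prob * s.collateral


def rank(strategies):
    """Order strategies from most to least profitable."""
    return sorted(strategies, key=expected_profit, reverse=True)


# Illustrative numbers only.
honest = Strategy("store the data", reward=10.0, cost=4.0, collateral=50.0, detection_prob=0.0)
cheat = Strategy("drop the data", reward=10.0, cost=0.0, collateral=50.0, detection_prob=0.5)

for s in rank([honest, cheat]):
    print(f"{s.name}: {expected_profit(s):.2f}")
```

With these made-up parameters the honest strategy ranks first, because the expected penalty for cheating outweighs the saved storage cost. The design goal in an incentive-based protocol is to choose rewards, collateral, and checks so that this ordering holds for every deviation you can think of.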

And so you are using incentives to solve problems. So can you give examples?

Irene Giacomelli: Filecoin itself is an example of this, right? In general, we have cryptographic proofs that act as a certificate of the service and that can trigger payments. The great idea of Filecoin is linking that to block mining. So now, storage providers can publish proofs that grant them the right to be elected to create a block and earn the related reward.

You might think of doing the same with retrievals using a proof of delivery, but it's actually impossible to do so in classical cryptography. We can actually prove that a “proof of delivery” cannot exist. This is a known limitation in cryptography that is linked to the well-studied fair-exchange problem. However, with the right incentives, we can design a protocol that overcomes this limitation and provides a workable solution.

I worked on this at CryptoNet Lab (Protocol Labs), and the result was retriev.org. This is a retrieval insurance protocol that uses crypto incentives to ensure guaranteed delivery in decentralized storage networks like Filecoin. It’s something that I hope to work more on after a few of these more near-term projects are completed.

What motivates you to do protocol research and design?

Irene Giacomelli: For me in particular, it’s seeing something that I worked on in theory as a protocol design go live. Let's say I design or co-design a protocol with a set of drawings and instructions, detailing what it should do and how it should work. That’s nice, but what I really care about is that this can go into the dev pipeline, be implemented, and be used, so that what I designed really makes an impact.

RECENT WORK

What problems are you working on now, especially when it comes to opening up new opportunities for the network?

Irene Giacomelli: We are working on exactly what you said – opening up new opportunities. In this case we are working on shipping a new proof system. What we have today on Filecoin is a proof system based on two pieces. First we have the PoRep (Proof of Replication) that allows a storage provider to prove that they have your data encoded in an incompressible form. Then we have repeated PoSt (Proof of Spacetime), which allows storage providers to prove that the encoded data is kept stored for a specific amount of time. The incompressibility property is expensive to achieve but it's needed for consensus. Indeed, in Filecoin, consensus power is proportional to the “spacetime” (i.e. space through time) resource that is committed to the network.

We are adding a new proof system that, while it doesn't offer incompressibility guarantees and cannot be used for consensus, is much more efficient to generate and can still be used to prove data possession to both the network and clients. This allows the Filecoin network to offer different types of storage services and business models.

For example, with the new proof system, storage providers can store data in an unencoded format for fast retrievals, which is not possible (without additional copies) with the current PoRep + PoSt system. This opens up new use cases for the network and new markets for storage providers.

What's this new proof called?

Irene Giacomelli: It’s called PDP, Proof of Data Possession, and it is not necessarily a new concept. It has been known in the literature and used in classic client-server storage systems. What we are doing at FilOz, and this goes back to what we were discussing about challenges for Web3 protocols, is solving those challenges and making this protocol scalable so that it can be shipped via smart contracts on a blockchain and used by applications and other protocols as a native proof.
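For readers unfamiliar with the general idea, the sketch below shows one textbook way to check data possession: a Merkle-tree challenge-response. It is a simplified illustration and not the PDP design being built at FilOz. The function names (`build_tree`, `prove`, `verify`) and the chunking are assumptions made for this example. The verifier keeps only the root hash, picks a random chunk index, and the provider must answer with that chunk plus the sibling hashes needed to recompute the root.

```python
import hashlib
import secrets


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_tree(chunks):
    """Return every level of a Merkle tree, leaves first and root last."""
    level = [h(b"\x00" + c) for c in chunks]   # 0x00 prefix marks leaf hashes
    levels = [level]
    while len(level) > 1:
        if len(level) % 2:                     # pad odd-sized levels by repeating the last node
            level = level + [level[-1]]
            levels[-1] = level
        level = [h(b"\x01" + level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def prove(chunks, levels, index):
    """Provider side: return the challenged chunk and its sibling path."""
    path, i = [], index
    for level in levels[:-1]:
        path.append(level[i ^ 1])              # sibling of node i at this level
        i //= 2
    return chunks[index], path


def verify(root, index, chunk, path):
    """Verifier side: recompute the root from the chunk and sibling path."""
    node, i = h(b"\x00" + chunk), index
    for sibling in path:
        node = h(b"\x01" + node + sibling) if i % 2 == 0 else h(b"\x01" + sibling + node)
        i //= 2
    return node == root


chunks = [f"chunk-{n}".encode() for n in range(5)]
levels = build_tree(chunks)
root = levels[-1][0]                           # the only value the verifier must keep

challenge = secrets.randbelow(len(chunks))     # unpredictable challenge index
chunk, path = prove(chunks, levels, challenge)
print(verify(root, challenge, chunk, path))
```

A single challenge only spot-checks one chunk, so a scheme along these lines would issue many random challenges over time. Making that cheap enough to verify on-chain is part of the scalability challenge described above.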

SOLVING REAL PROBLEMS

What other things excite you about the space? Are there other proofs? Are there other protocol elements that really excite you?

Irene Giacomelli: Retrievability for sure. It's something that I would like to see improved for Filecoin. Another interesting open problem is designing a decentralized protocol for reporting performance metrics without a trusted authority. This could apply to retrievals as well as other services. Typically, a centralized entity tests providers and reports on their performance but decentralization removes this option. In Web3, there is no single entity that is testing.

The members of a network can perform tests, but there are a few problems with this. For example, how do you handle cases where nodes report different values for the same metric? How can we ensure the network doesn’t collude with providers? A colleague and I worked on this for a short time with help from an academic researcher. But it's still an open problem, and I think it is a really exciting one for both academic research and practical Web3 solutions.
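As a small illustration of the first question (nodes reporting different values for the same metric), one common starting point is a robust aggregate such as the median, which tolerates a minority of wrong or dishonest reports. The sketch below is an assumption-laden example and not a solution to the open problem: `aggregate_reports`, the node names, and the 25% tolerance are all hypothetical, and a median does nothing against a colluding majority.

```python
from statistics import median


def aggregate_reports(reports, tolerance=0.25):
    """Combine per-node measurements of one metric.

    Returns the median value and the sorted names of nodes whose report
    deviates from the median by more than `tolerance` (as a fraction).
    """
    if not reports:
        raise ValueError("no reports to aggregate")
    consensus = median(reports.values())
    outliers = sorted(
        node for node, value in reports.items()
        if abs(value - consensus) > tolerance * abs(consensus)
    )
    return consensus, outliers


# Hypothetical retrieval-latency reports (milliseconds) for one provider.
reports = {
    "node-a": 120.0,
    "node-b": 118.0,
    "node-c": 125.0,
    "node-d": 20.0,   # suspiciously optimistic report
    "node-e": 122.0,
}

consensus, outliers = aggregate_reports(reports)
print(consensus, outliers)
```

Here the single outlying report is flagged and does not move the aggregate. The hard part that remains open is deciding who gets to report at all, and how to make honest reporting the most profitable strategy when testers and providers might coordinate.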